How to remove duplicate records from Flat File Source (SSIS)

April 21, 2010 by Munish Bansal

Problem:

Since populating the data warehouse or any other database may involve various disparate source-types like SQL Server, Excel spreadsheets, DB2 etc. It can also be a Flat File/CSV file source as well.

In case of data sources like SQL Server, Oracle wherein we generally get the data by querying the tables, views etc, we can actually put the logic to remove the duplicate probably by selecting the DISTINCT values or so. But in case of a Flat File data source we don’t have much control in terms of querying the data from it and still we want the duplicate records to be removed before loading them into the target database/file.

Solution:

Let’s take an example where we have a flat file with four columns (A,B,C,D) and some records (100,200,300,400 etc) as shown below:

Source File

And now say we want all of the columns to be loading into the target database, but with duplicate records (lines) removed.

Approach:

Steps:

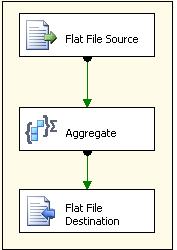

1. Configure the Flat File Source as we required by selecting the file, delimiters (columns & header) etc.

2. Now drag ‘Aggregate’ transformation following Flat File Source and configure it to have ‘Group By’ on the deciding columns/fields for the record to be unique.

3. Configure destination by mapping the columns from Aggregate transformation to the target database/file.

Run the package, and you will see no duplicate record inserted into the target database/file.

Dest File

ReplyDeleteHey thanks for wonderful post.I really like this blog,its very intresting for more information please visit to

Duplicate Records